The Problem

The tech talent war is intensifying. Sign-on bonuses for top researchers at AI labs now reach $1-2M. Widely cited projections put the global talent shortage at 85 million workers by 2030. Time-to-hire for senior engineers averages 49 days, and companies lose top candidates to competitors during those lengthy processes.

The sourcing problem sits at the heart of this. GitHub hosts the world's largest collection of developer work samples, but there's no efficient way to search it. Recruiters manually scroll through profiles, trying to assess candidates from scattered signals - contribution graphs, pinned repos, commit histories. The information exists, but extracting it takes 15-20 minutes per profile.

Problem Analysis

This project started at a hackathon focused on the tech recruiting crisis. Before building, we mapped out the core pain points in the sourcing workflow:

- Discovery gap: GitHub search returns usernames, not qualified candidates. There's no way to query "Rust developers in Sydney who contribute to infrastructure projects" - you just get keyword matches.

- Signal extraction: The real indicators of engineering quality - merged PRs to popular projects, code review patterns, collaboration networks - require manual investigation. Pinned repos and star counts are often misleading.

- Context collapse: A green contribution graph shows activity, but not quality. 1,000 commits to personal toy projects mean something different from 50 merged PRs to React or Kubernetes.

- Credential inflation: 73% of recruiters say finding qualified tech talent is their biggest challenge. Separating genuine skill from inflated credentials requires looking at actual work output, not self-reported experience.

Design Goals

From this analysis, we identified three requirements for a hackathon-viable solution:

1. Semantic Search Over Developers

Enable natural language queries that understand intent. "TypeScript developers who contribute to testing frameworks" should return ranked results, not keyword matches.

2. Automated Signal Extraction

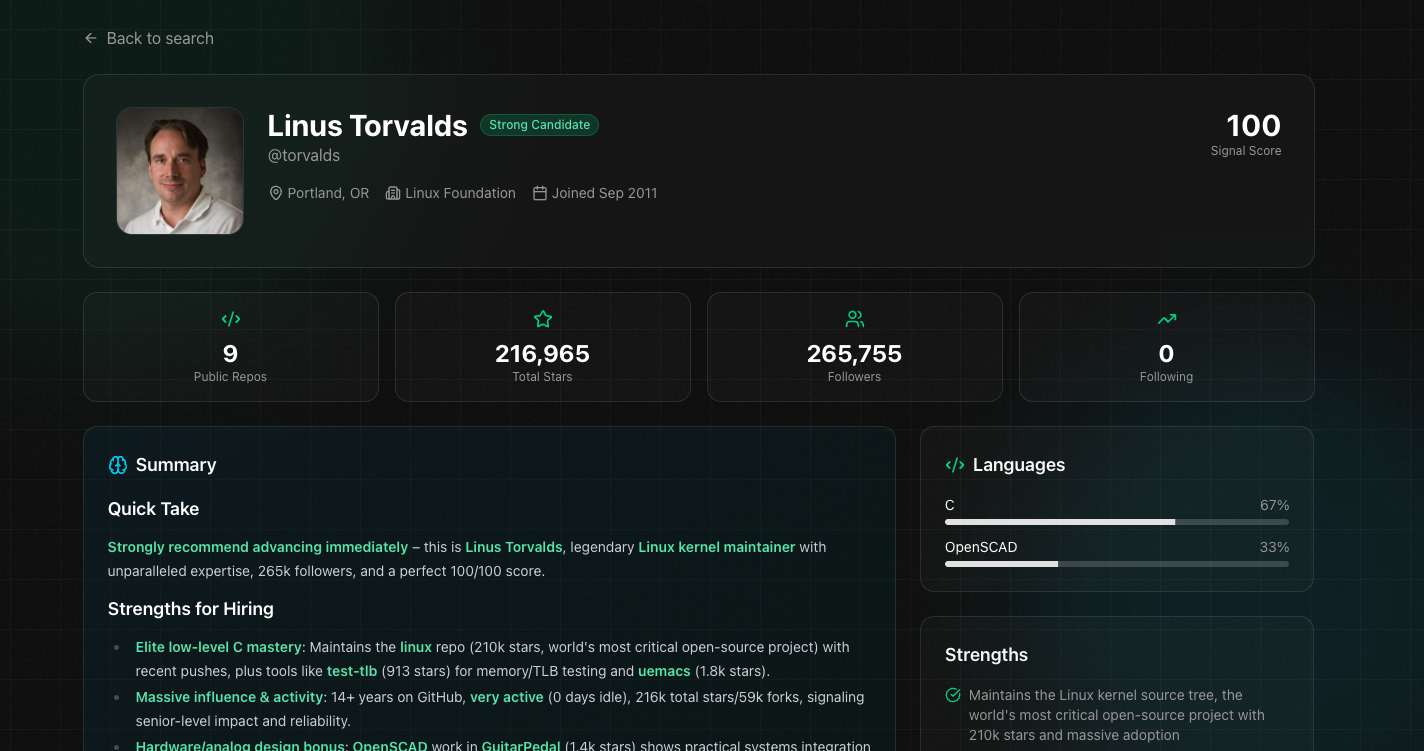

Turn 15-20 minutes of manual profile review into near-instant analysis. Surface language proficiency, contribution quality, collaboration patterns, and activity trends automatically.

3. Quality-Weighted Scoring

Move beyond vanity metrics. Weight maintained projects over abandoned ones, external contributions over self-owned repos, recent activity over historical.

The Solution

The idea: treat GitHub like a database of developer signals. Contribution graphs, language distribution, commit patterns, PR review activity, collaboration networks - there's a lot of structured data buried in profiles that's tedious to extract manually.

Git Radar indexes this data and exposes it through a semantic search layer. Query "Rust developers in Sydney with open source contributions" and get ranked results with profile breakdowns, not a list of usernames.

System Design

The indexing pipeline fetches profile data through the GitHub API, extracts features (repos, commits, PRs, languages, collaborators), and stores structured embeddings in PostgreSQL with pgvector. The semantic search layer uses Exa for query understanding, which handles the translation from natural language to vector similarity search.
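To make the retrieval step concrete, here is a minimal in-memory sketch of ranking by cosine similarity. In the real system this comparison would happen inside PostgreSQL (pgvector exposes cosine distance through its `<=>` operator); the `ProfileEmbedding` shape, the `rankProfiles` helper, and the tiny vectors in the usage note are hypothetical and only illustrate the idea.

```typescript
// Hypothetical shape of an indexed profile: a login plus its embedding vector.
interface ProfileEmbedding {
  login: string;
  vector: number[];
}

// Cosine similarity between two equal-length vectors (1 = same direction).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // treat zero vectors as unrelated
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank profiles by similarity to the query embedding, best match first.
function rankProfiles(
  query: number[],
  profiles: ProfileEmbedding[],
  limit: number
): Array<{ login: string; similarity: number }> {
  return profiles
    .map((p) => ({ login: p.login, similarity: cosineSimilarity(query, p.vector) }))
    .sort((x, y) => y.similarity - x.similarity)
    .slice(0, limit);
}
```

Calling `rankProfiles(queryVector, profiles, 10)` returns the ten closest profiles. A database-side index does the same ordering without loading every vector into application memory.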

Profile analysis runs through a dual-model setup: Claude handles the structured extraction (skill classification, experience level inference, contribution quality scoring) while Grok powers the more generative features. The Vercel AI SDK abstracts the model switching - same interface, different capabilities depending on the task.
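The task-to-model split can be sketched as a small routing table. This is an illustration only: the task names and the `pickProvider` helper are hypothetical, `profile-summary` is an assumed example of a "generative feature", and the actual model calls through the AI SDK are left out.

```typescript
// Task kinds named in the text, plus one assumed generative task.
type AnalysisTask =
  | "skill-classification"
  | "experience-inference"
  | "contribution-scoring"
  | "profile-summary"; // hypothetical example of a generative feature

type ModelProvider = "claude" | "grok";

// Record<AnalysisTask, ...> makes the compiler flag any task left unrouted.
const PROVIDER_BY_TASK: Record<AnalysisTask, ModelProvider> = {
  "skill-classification": "claude",
  "experience-inference": "claude",
  "contribution-scoring": "claude",
  "profile-summary": "grok",
};

// Look up which provider should handle a given analysis task.
function pickProvider(task: AnalysisTask): ModelProvider {
  return PROVIDER_BY_TASK[task];
}
```

Keeping the mapping in one typed table means adding a new task is a compile error until it has been assigned a model.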

The collaboration graph visualization was the most interesting frontend problem. D3's force simulation handles the physics, but I wrapped it with React Flow for the interaction layer - pan, zoom, node selection, edge highlighting. The tricky part was tuning the force parameters to produce readable layouts across different network densities without manual positioning.
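One way to avoid hand-tuning per graph is to derive the force parameters from network density. The sketch below is an assumed heuristic, not the exact tuning used in Git Radar: the constants are made up, and the returned values are meant to be passed to `d3.forceManyBody().strength(...)` and `d3.forceLink().distance(...)`.

```typescript
interface ForceParams {
  chargeStrength: number; // intended for d3.forceManyBody().strength(...)
  linkDistance: number; // intended for d3.forceLink().distance(...)
}

// Density of an undirected graph: actual edges / possible edges, in [0, 1].
function graphDensity(nodeCount: number, edgeCount: number): number {
  if (nodeCount < 2) return 0;
  const maxEdges = (nodeCount * (nodeCount - 1)) / 2;
  return Math.min(1, edgeCount / maxEdges);
}

// Hypothetical heuristic: denser graphs get stronger repulsion and longer
// links so that tightly connected clusters do not collapse into a hairball.
function forceParamsFor(nodeCount: number, edgeCount: number): ForceParams {
  const density = graphDensity(nodeCount, edgeCount);
  return {
    chargeStrength: -(100 + 400 * density),
    linkDistance: 40 + 120 * density,
  };
}
```

A sparse network keeps the compact defaults (strength -100, distance 40), while a fully connected one spreads out to strength -500 and distance 160.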

Implementation Notes

GitHub's API rate limits (5,000 requests/hour authenticated) meant the indexing pipeline needed to be async and resumable. I implemented a job queue with Upstash Redis that handles backpressure automatically - when we hit rate limits, jobs get rescheduled with exponential backoff. The queue also deduplicates requests, so fetching the same profile twice within the cache window is a no-op.
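The two queue behaviors described above, exponential backoff and deduplication within a cache window, can be sketched without any Redis dependency. Everything here is an assumption for illustration: the in-memory `Map` stands in for Redis, and `backoffDelayMs`, `ProfileFetchQueue`, and the default delays are hypothetical names and values.

```typescript
// Exponential backoff: baseMs * 2^attempt, capped at maxDelayMs.
function backoffDelayMs(
  attempt: number,
  baseMs: number = 1000,
  maxDelayMs: number = 60 * 60 * 1000
): number {
  return Math.min(maxDelayMs, baseMs * Math.pow(2, attempt));
}

// In-memory stand-in for the deduplicating queue.
class ProfileFetchQueue {
  private lastEnqueued = new Map<string, number>();
  private cacheWindowMs: number;

  constructor(cacheWindowMs: number) {
    this.cacheWindowMs = cacheWindowMs;
  }

  // Returns true if a fetch job was enqueued, false if it was deduplicated
  // because the same profile was requested within the cache window.
  enqueue(login: string, nowMs: number): boolean {
    const key = login.toLowerCase(); // GitHub logins are case-insensitive
    const last = this.lastEnqueued.get(key);
    if (last !== undefined && nowMs - last < this.cacheWindowMs) {
      return false;
    }
    this.lastEnqueued.set(key, nowMs);
    return true;
  }
}
```

A rate-limited job on its third retry would be rescheduled `backoffDelayMs(3)` milliseconds later (8 seconds with these defaults). Passing the clock in as `nowMs` keeps the dedup logic deterministic and easy to test.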

For the LLM integration, I use Zod schemas with the AI SDK's structured output mode. The model returns typed objects that match the frontend's expected shape, which eliminates the hand-written parsing and validation layer I'd otherwise need. When the schema changes, TypeScript catches the mismatch at build time rather than at runtime.

The Signal Score algorithm weights recent activity higher than historical, with decay curves that differ by contribution type. A merged PR from last month matters more than one from three years ago, but a maintained project with consistent commits over years scores higher than a burst of activity followed by abandonment. The weights were tuned against manual rankings of ~200 profiles.
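A minimal sketch of the decay idea, assuming exponential decay with a different half-life per contribution type. The base weights, half-lives, and external-repo multiplier below are placeholder values, not the tuned weights, and `signalScore` is a simplified stand-in for the real algorithm.

```typescript
type ContributionKind = "merged-pr" | "review" | "commit";

interface Contribution {
  kind: ContributionKind;
  ageDays: number; // days since the contribution happened
  toExternalRepo: boolean; // true when the repo is not owned by the developer
}

// Placeholder values for illustration; the real weights were tuned manually.
const BASE_WEIGHT: Record<ContributionKind, number> = {
  "merged-pr": 5,
  review: 3,
  commit: 1,
};
const HALF_LIFE_DAYS: Record<ContributionKind, number> = {
  "merged-pr": 365,
  review: 180,
  commit: 90,
};
const EXTERNAL_MULTIPLIER = 2;

// Score one contribution: base weight, boosted for external repos,
// halved every HALF_LIFE_DAYS[kind] days.
function contributionScore(c: Contribution): number {
  const decay = Math.pow(0.5, c.ageDays / HALF_LIFE_DAYS[c.kind]);
  const ownership = c.toExternalRepo ? EXTERNAL_MULTIPLIER : 1;
  return BASE_WEIGHT[c.kind] * ownership * decay;
}

// Total score is the sum of the decayed contribution scores.
function signalScore(contributions: Contribution[]): number {
  return contributions.reduce((sum, c) => sum + contributionScore(c), 0);
}
```

Because every contribution decays independently, a project with steady commits always has recent, lightly decayed entries in the sum, while an old burst of the same size has decayed almost to zero.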

Tech Stack

Frontend

- Next.js 16, React 19

- Tailwind CSS v4

- D3.js, React Flow

Backend

- PostgreSQL, pgvector, Drizzle

- Upstash Redis

- Supabase Auth

AI

- Claude, Grok

- Vercel AI SDK

- Exa Search

Infrastructure

- Vercel

- GitHub OAuth